Autonomous Driving, how the driver less revolution will change the world by Hermann, Brenner and Stadler provides comprehensive coverage of the revolution in mobility that is leading towards to goal of achieving autonomy in driving. Its worth noting that Autonomy is focused on the autonomy of the computer system and associated cloud entities and that systems ability to operate, control/drive a vehicle. Existing 20th century vehicles were already autonomous driving vehicles with the human driver being the entity experiencing autonomy.

Structure

The book provides significant coverage over 400+ pages, divided into 39 chapters across 10 parts. The main parts of the book are:

- Evolution and Revolutions in Mobility

- Perspectives on Autonomous Driving

- Technology of Autonomous Driving

- Arena of Autonomous Driving

- Customers and their mobility behavior

- Framework and conditions for Autonomous driving

- Impact on vehicles

- Impact on companies

- Impact on Society

- What needs to be done.

Automated driving versus Autonomous driving

Automated driving is an umbrella term that described an evolution of capabilities and functionality that begins with driver assistance systems. Autonomous driving describes an end state where the system takes over all steering, breaking, accelerating control. It achieves a level of functionality where it takes the person ‘out of the loop’ and the car is operating independently at all times and in all traffic situations.

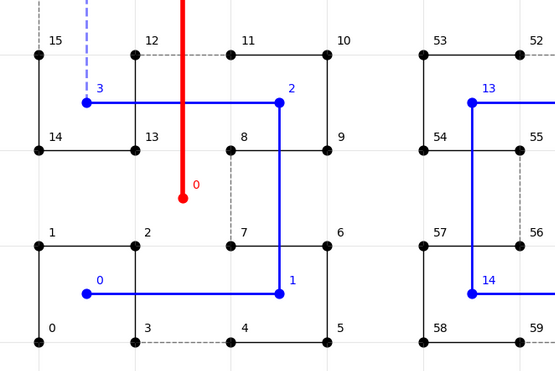

The capabilities along this spectrum is clearly laid out using a set of levels:

- 0: This is the starting point, no automation, the driver is in complete control of the vehicle without any system suopport.

- Level 1 and 2: The system takes responsibility for more of the driving tasks but the driver is obliged to permanently monitor the system, the traffic and it operating environment. In these levels the driver must be able to take over the driving function at any time.

- 3: is a state where the driver no longer needs to monitor the driving activity continuously, with the system indicating when its necessary to take control of the vehicle.

- 4: is a state where the driver has the capability to undertake all the driving functions in the normal operating environment but the drive can overrule or take over the operation of the vehicle.

- 5: is true autonomy where the system is always in control and the driver is now just a passenger. As a passenger, they cannot influence the operation of the vehicle in terms of control. The person, of course, still indicates parameters such as destination and traveling parameters (tolls/no tolls, take the scenic route etc.)

State of Art in Autonomous Driving

Autonomous driving is no longer science fiction. There are self-driving vehicles currently operating, some in normal traffic and some in controlled environments. As the developers and operators of these systems gain experience they are expanding the roles, locations and available capabilities that are being deployed into ‘the wild’.

A number of mobility use cases are enabled through autonomy. This may result in different types of self cars reaching the market:

- Robo cars,

- Busses

- and multi-purpose vehicles.

Although Autonomy is coming to the car and it is evolving into being part of a new (‘Disrupted’) ecosystem. This offers a wide variety of connected services and mobility solutions including operating in conjunction with other modes of transportation.

Autonomy and social benefits.

Currently world wide there are over 1.25 million deaths and over 50 million injuries that are associated with traffic collisions. This is a significant cost to society both in terms of human costs but also health and safety associated costs. It is estimated that way over 90% of traffic collisions can be attributed to human error. With the worldwide adoption of autonomous driving technologies and associated mobility use cases there is significant opportunities for reducing the costs to society and turning autonomy into a social benefit.

It is estimated in the US that traffic accidents and the associated repairs, hospitalization, rehabilitation and other such costs amounts to $500 billion per year. Autonomy use cases that significant reduce traffic related death, injury and damage will have a significant impact on this very significant financial cost.

Trends in Mobility.

Autonomy and mobility are closely intertwined with a number of other trends and areas that are very prominent in the 21st century, these include:

- Connectivity.

- Urbanization.

- Sustainability.

- Electrification.

- Sharing.

Life in the 21st century is embracing and driving massive changes throughout these areas, all these trends promote autonomous mobility and mobility use cases contribute positively to the acceleration of growth and adoption of these trends.